NTT Bench — BabyBear vs Goldilocks (ZK Hack S3M2)

TL;DR — I implemented a generic radix-2 Cooley–Tukey NTT in Rust over two STARK-style fields (BabyBear and Goldilocks), added a parallel version using

rayon, and benchmarked them withcriterionfor sizes up to2^16. On my 4-core Intel CPU, BabyBear is consistently faster per point than Goldilocks (fewer bits, simpler multiplications, better cache density), and parallel NTT only wins for larger domains where the work amortizes the thread scheduling overhead. All code lives instark-ntt-benchand is fully reproducible.

1. Motivation — from ZK Hack whiteboard to Rust code

- ZK Hack Whiteboard session with Jim Posen: “High-Performance Engineering for SNARKs (ZK Hack S3M2)”.

- One of the main messages: a prover is a numerical engine. In modern SNARK/STARK stacks, the hot loops are:

- small-field multiplications,

- NTTs / FFTs,

- polynomial commitment openings,

- hashes.

- Rather than jump directly to GPUs or FPGAs, I wanted to consolidate basic concepts that will be needed overall many protocols:

- implement a minimal yet realistic NTT backend,

- run it on two fields heavily used in practice,

- connect the results to his ideas on parallelism and hardware-aware design.

2. Small prime fields for STARKs

If you look at projects like ethproofs.org, the whole point is to make proving fast enough for near real-time Ethereum verification. Under the hood, that means running huge numbers of NTTs and field operations as efficiently as possible on commodity hardware. Fields like BabyBear and Goldilocks, and the kind of micro-benchmarks in this post, are small-scale versions of the performance questions those systems face.

2.1 BabyBear and Goldilocks in words

-

BabyBear (≈ 31-bit prime,

p = 2³¹ − 2²⁷ + 1):- Fits inside a 32-bit lane with headroom.

- Plays nicely with SIMD over 32-bit integers (NEON, SSE, AVX).

- Has good 2-adicity (large power of two dividing

p−1), which is great for radix-2 NTTs. - Popular in STARK/zkVM stacks where you want massive, parallelizable arithmetic on CPU & GPU.

-

Goldilocks (

p = 2⁶⁴ − 2³² + 1):- A 64-bit prime engineered so that reductions can be done efficiently with 128-bit intermediates and a nice reduction rule.

- Attractive when:

- your CPU has very strong 64-bit integer support,

- you want a lot of dynamic range per field element,

- you may later target 64-bit-oriented hardware (e.g., GPU kernels that like 64-bit lanes).

2.2 Why “small” fields?

- Throughput is about how many field multiplies/adds we can do per second.

- For a fixed machine:

- 32-bit fields like BabyBear:

- use smaller registers, often allowing more parallelism per SIMD instruction;

- pack more elements into caches (L1/L2) → better locality during NTTs;

- have cheaper 32×32→64 multiplications.

- 64-bit fields like Goldilocks:

- need 128-bit intermediates for multiplication,

- each butterfly does “heavier” work,

- fewer elements fit in caches for the same domain size.

- 32-bit fields like BabyBear:

- So we get a trade-off:

- BabyBear → more operations per second, but less range per element.

- Goldilocks → fewer ops per second but larger dynamic range, sometimes nicer algebraic properties.

In the talk, Posen basically says: “If you want a fast prover, your choice of field is a hardware decision.” My tiny benchmark is a local, toy confirmation of that: BabyBear feels “lighter” on a CPU than Goldilocks, exactly because the machine is optimized for 32-bit/64-bit lanes and small integer muls.

2.3 Rust types — putting the fields in code

Show shortened versions of our field structs (omit some boilerplate):

// BabyBear: 31-bit prime field (2^31 - 2^27 + 1).

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub struct BabyBear(pub u32);

impl BabyBear {

pub const MOD: u32 = 2_013_265_921;

#[inline]

pub fn new(x: u64) -> Self {

BabyBear((x % Self::MOD as u64) as u32)

}

#[inline]

pub fn add(self, other: Self) -> Self {

BabyBear::new(self.0 as u64 + other.0 as u64)

}

#[inline]

pub fn sub(self, other: Self) -> Self {

BabyBear::new(self.0 as u64 + Self::MOD as u64 - other.0 as u64)

}

#[inline]

pub fn mul(self, other: Self) -> Self {

BabyBear::new(self.0 as u64 * other.0 as u64)

}

}

// Goldilocks: 64-bit prime (2^64 - 2^32 + 1).

#[derive(Copy, Clone, Debug, PartialEq, Eq)]

pub struct Goldilocks(pub u64);

impl Goldilocks {

pub const MOD: u64 = 0xFFFF_FFFF_0000_0001;

#[inline]

pub fn new(x: u128) -> Self {

Goldilocks((x % (Self::MOD as u128)) as u64)

}

#[inline]

pub fn add(self, other: Self) -> Self {

Goldilocks::new(self.0 as u128 + other.0 as u128)

}

#[inline]

pub fn sub(self, other: Self) -> Self {

Goldilocks::new(self.0 as u128 + Self::MOD as u128 - other.0 as u128)

}

#[inline]

pub fn mul(self, other: Self) -> Self {

Goldilocks::new((self.0 as u128) * (other.0 as u128))

}

}They both share a NttField trait to abstract a general field class.

3. NTT as the hot loop

3.1 The FFT/NTT trick in one paragraph

The DFT is defined as:

Multiplying big polynomials or evaluating them on many points is O(n²), too slow for massive polynomials.

- FFT trick: switch representation from coefficients to values at roots of unity (evaluation form). In that form:

- Convolution/multiplication becomes point-wise multiply.

- Evaluation/interpolation both cost O(nlogn) via divide-and-conquer.

- NTT = “FFT but over a finite field.” Same trick, just using field roots of unity instead of complex roots. That makes it compatible with SNARK/STARK arithmetic.

Where do we find these in SNARK/STARTs?

- Polynomial commitments (PLONK/KZG family): build large polynomials (selector/constraint/quotient).

- STARKs (FRI): repeatedly check that a function is close to a low-degree polynomial. Prover must evaluate on huge domains.

- Merkle-ized or FRI codewords: evaluate codewords via NNTs.

- Polynomial multiplications everywhere.

3.2 The NttField abstraction

I defined a minimal trait for fields:

pub trait NttField: Copy + Clone {

fn from_u64(x: u64) -> Self;

fn zero() -> Self;

fn one() -> Self;

fn add(self, rhs: Self) -> Self;

fn sub(self, rhs: Self) -> Self;

fn mul(self, rhs: Self) -> Self;

fn pow(self, exp: u64) -> Self;

fn modulus() -> u64;

}This hides the concrete field and lets the NTT code be generic. Implementation is trivial for BabyBear / Goldilocks (shown above).

3.3 Bit-reversal and iterative Cooley–Tukey

Cooley–Tukey decomposition groups even/odd indices, then recursively splits them; bit-reversal lets you do that iteratively in-place.

We have an array a of length n, and n is a power of two: n = 2^k.

For each index i (0 to n-1), write i in binary with exactly k bits, reverse those bits, and call that number rev(i).

pub fn bit_reverse<T>(a: &mut [T]) {

let n = a.len();

assert!(n.is_power_of_two(), "NTT length must be a power of two");

let mut j: usize = 0;

// Standard iterative bit-reversal algorithm.

for i in 0..n {

if i < j {

a.swap(i, j);

}

let mut bit = n >> 1;

while j & bit != 0 {

j ^= bit;

bit >>= 1;

}

j |= bit;

}

}Example for n = 8 (k = 3 bits):

| i (dec) | i (bin) | rev(i) (bin) | rev(i) (dec) |

|---|---|---|---|

| 0 | 000 | 000 | 0 |

| 1 | 001 | 100 | 4 |

| 2 | 010 | 010 | 2 |

| 3 | 011 | 110 | 6 |

| 4 | 100 | 001 | 1 |

| 5 | 101 | 101 | 5 |

| 6 | 110 | 011 | 3 |

| 7 | 111 | 111 | 7 |

We want to do that in-place, swapping entries, without computing rev(i) from scratch each time. The question is how do we move j through the sequence 0, 4, 2, 6, 1, 5, 3, 7 without recomputing bit-reversed(i) each time?

3.4 Forward and inverse NTT

A radix-2 decimation-in-time (DIT) FFT is the simplest and most common form of the Cooley–Tukey algorithm, although highly optimized Cooley–Tukey implementations typically use other forms of the algorithm. Radix-2 DIT divides a DFT of size N into two interleaved DFTs (hence the name "radix-2") of size N/2 with each recursive stage.

- Forward NTT → “frequency space”

- Inverse NTT → back to “time space”

- Both over BabyBear & Goldilocks.

/// Iterative radix-2 NTT:

/// 1. Bit-reversal permutation of the input.

/// 2. Loop over stages `len = 2, 4, 8, ...`:

/// - Precompute stage twiddles ω^(step * j).

/// - Perform butterflies:

/// `u = a[i]; v = a[i + len/2] * twiddle; a[i] = u + v; a[i + len/2] = u - v`.

pub fn ntt_cooley_tukey<F: NttField>(a: &mut [F], omega: F) {

let n = a.len();

assert!(n.is_power_of_two());

// 1. bit-reverse reordering (aligns data for iterative butterflies)

bit_reverse(a);

// 2. Cooley–Tukey stages

let mut len = 2;

while len <= n {

let half = len / 2;

let step = n / len;

// For this stage, the base twiddle is w^{step}.

// We can precompute w_len = ω^{step} and then walk powers: 1, w_len, w_len^2, ...

let w_len = omega.pow(step as u64);

// Process blocks of size `len`

let mut start = 0;

while start < n {

let mut w = F::one(); // current twiddle in this block

for j in 0..half {

let u = a[start + j]; // "E_k"

let v = a[start + j + half]; // "O_k"

let t = v.mul(w); // w^j * O_k (twiddle multiply)

// Butterfly:

// X_k = E_k + w^j * O_k

// X_{k+N/2} = E_k - w^j * O_k

a[start + j] = u.add(t);

a[start + j + half] = u.sub(t);

w = w.mul(w_len); // move to next twiddle power in this block

}

start += len;

}

len <<= 1;

}

}

/// In-place inverse NTT.

///

/// We reuse the forward Cooley–Tukey but with ω^{-1} and then multiply

/// by n^{-1} so that NTT^{-1}(NTT(a)) = a.

pub fn intt_cooley_tukey<F: NttField>(a: &mut [F], omega: F) {

let n = a.len();

assert!(n.is_power_of_two());

// ω^{-1} = ω^{n-1} because ω^n = 1

let omega_inv = omega.pow((n as u64) - 1);

// Forward NTT with ω^{-1}

ntt_cooley_tukey(a, omega_inv);

// Multiply by n^{-1} (mod p)

let n_inv = F::from_u64(n as u64).pow(F::modulus() - 2); // Fermat's little theorem

for x in a.iter_mut() {

*x = x.mul(n_inv);

}

}4. Parallelism on CPU

4.1 Why NTTs are "embarrassingly parallel"

Each butterfly combines two numbers with a twiddle, no data dependency across butterflies in the same stage.

For each stage, the array is split into blocks of size len, being each one independent, so it is a perfect fit for rayon::par_chunks_mut(len).

Tie back to Posen’s talk: feeding the pipelines, keeping ALUs busy.

4.2 My rayon version

I implemented a basic in-place Cooley–Tukey NTT using rayon, using the same strategy as the serial version shown above, splitting the array at each stage into disjoint chunks of size len, and then processing each one independently with the same butterfly pattern:

pub fn ntt_parallel<F>(a: &mut [F], omega: F)

where

F: NttField + Send + Sync,

{

let n = a.len();

assert!(n.is_power_of_two());

println!("Rayon threads: {}", rayon::current_num_threads());

bit_reverse(a);

let mut len = 2;

while len <= n {

let half = len / 2;

let step = n / len;

let w_len = omega.pow(step as u64);

a.par_chunks_mut(len).for_each(|chunk| {

let mut w = F::one();

for j in 0..half {

let u = chunk[j];

let v = chunk[j + half].mul(w);

chunk[j] = u.add(v);

chunk[j + half] = u.sub(v);

w = w.mul(w_len);

}

});

len <<= 1;

}

}5. Benchmarks — BabyBear vs Goldilocks, serial vs parallel

5.1 Setup

I benchmarket it directly in my old laptop, that has 4 cores, 8 threads.

RUSTFLAGS="-C target-cpu=native -C opt-level=3" cargo benchFramework includes criterion, StdRng, sizes n = 2^10, 2^12, 2^14, 2^16.

This gives a concrete, numeric feel to the “small field vs bigger field” discussion from §2.2.

5.2 BabyBear vs Goldilocks timings

For each size, I measured the mean time per NTT in microseconds.

Serial:

Here are the measured mean latencies (µs) per full NTT on my 4-core laptop:

| n | BabyBear (µs) | Goldilocks (µs) | Ratio (Goldilocks / BabyBear) |

|---|---|---|---|

| 1024 | 21.998 | 351.350 | 15.98× slower |

| 4096 | 112.915 | 1939.561 | 17.17× slower |

| 16384 | 783.758 | 8038.016 | 10.26× slower |

| 65536 | 2491.370 | 40766.832 | 16.36× slower |

On my machine:

- Goldilocks is ≈10–17× slower than BabyBear for the same domain size.

- The ratio stays roughly stable as

ngrows. Both algorithms areO(n log n), but each Goldilocks butterfly is “heavier” (64-bit field + 128-bit multiplies). - BabyBear fits cleanly into 32-bit lanes, its multiplications use cheap 32×32→64 ops, and more elements fit into L1/L2 cache. Goldilocks has half the cache density and more expensive arithmetic.

- So even though the algorithm is identical, the per-operation hardware cost creates a large, consistent gap.

This is a concrete, numeric confirmation of the “small field vs bigger field” discussion from §2.2.

Parallel:

| n | BabyBear parallel | Goldilocks parallel | Ratio (Goldilocks / BabyBear) |

|---|---|---|---|

| 1024 | 188.795 | 455.785 | 2.41× slower |

| 4096 | 342.691 | 1026.306 | 2.99× slower |

| 16384 | 1149.353 | 3811.337 | 3.31× slower |

| 65536 | 4876.128 | 20755.685 | 4.26× slower |

Parallelism changes the picture:

- The Goldilocks/BabyBear gap shrinks from ≈15× in the serial case to ≈2–4× in the parallel case.

- At small

n, the runtime is dominated by thread scheduling and cache traffic, so the raw cost of a single butterfly matters less. - As

ngrows, arithmetic dominates again and the ratio creeps up (from ≈2.4× atn=1024to ≈4.2× atn=65536).

This is what you would expect if the machine is first fighting overhead, and only later saturating the ALUs.

5.3 Speedup

We can also look at parallel vs serial directly:

| n | BabyBear speedup (parallel / serial) | Goldilocks speedup (parallel / serial) |

|---|---|---|

| 1024 | 0.11× (slower) | 0.77× (slower) |

| 4096 | 0.33× (slower) | 1.89× faster |

| 16384 | 0.68× (slower) | 2.11× faster |

| 65536 | 1.96× faster | 1.96× faster |

Key takeaways:

- For

n = 1024, parallel NTT is actually much worse:

BabyBear becomes ~9× slower because the work per stage is tiny and Rayon overhead dominates. - Goldilocks starts to benefit earlier (from

n = 4096) because each butterfly is heavier, so there is more work to amortize scheduling and cache misses. - At

n = 65536, both fields reach a clean ≈2× speedup on a 4-core CPU, which is about what you’d expect when you can finally keep all cores busy with real work.

This matches Posen’s message: parallelism only makes sense once butterflies dominate, not before.

For small problem sizes, the overhead of thread management and memory traffic outweighs the benefits of SIMD/parallel execution.

Only as data sizes grow (n ≥ 16k) do we see clean speedups and hardware concurrency matches theory.”

5.4 Connecting to Jim Posen’s “real-time proving”

NTTs in real provers operate on domains orders of magnitude larger (2^20, 2^23, …).

Those domains are large enough that parallelism is essential:

- one prover run may perform thousands of NTTs,

- each NTT may operate on millions of points.

My toy benchmark is a microcosm of that: as soon as n is big enough, parallel NTT starts looking like a win.

Hardware consequence:

Real STARK provers run NTTs at 2¹⁸–2²⁴ points, so they sit well into the “parallel is worth it” regime, and field choice becomes a hardware decision:

- choose BabyBear-like fields for raw throughput,

- choose Goldilocks-like fields for larger dynamic range and 64-bit oriented pipelines,

- select SIMD width, cache model, and pipeline depth accordingly.

6. Future work / what I didn’t do

This post focuses on foundational building blocks. As I scale up these concepts, I plan to explore:

- Binary fields and Binius/Binius64 (additive NTTs, binary trees).

- SIMD: actually pack BabyBear into 128-/256-bit vectors and do lane-wise butterflies.

- GPUs: write CUDA/Metal kernels where each warp handles a chunk.

- FPGAs / ASICs: pipeline butterflies and twiddle multiplication à la Jim Posen.

- Integrate with a real STARK library (e.g., FRI, polynomial commitments) and benchmark full proving time.

7. Repo and how to run it

The code for this post lives at: https://github.com/reymom/ntt-bench.

To reproduce the benchmarks:

git clone ...

cd stark-ntt-bench

RUSTFLAGS="-C target-cpu=native -C opt-level=3" cargo benchTo sanity-check the NTT/INTT over both fields:

cargo run --bin mainWhich prints:

BabyBear before NTT: [...]

BabyBear after NTT: [...]

Recovered? [0,1,2,3,4,5,6,7]

Goldilocks before NTT: [...]

Goldilocks after NTT: [...]

Recovered? [0,1,2,3,4,5,6,7]References

Stay Updated

Get notified when I publish new articles about Web3 development, hackathon experiences, and cryptography insights.

You might also like

Cryptography — What makes a Hash ZK-Friendly (ZK Hack S3M1)

Practical Learnings from ZK Hack with JP Aumasson with hands-on benchmarks: SHA-256/512, BLAKE3, Poseidon. What does 'ZK-friendly' really mean?

Crescent Bench Lab: Measuring ZK Presentations for Real Credentials (JWT + mDL)

A small Rust lab that vendors microsoft/crescent-credentials, generates Crescent test vectors, and benchmarks zksetup/prove/show/verify across several parameters — including proof sizes and selective disclosure variants.

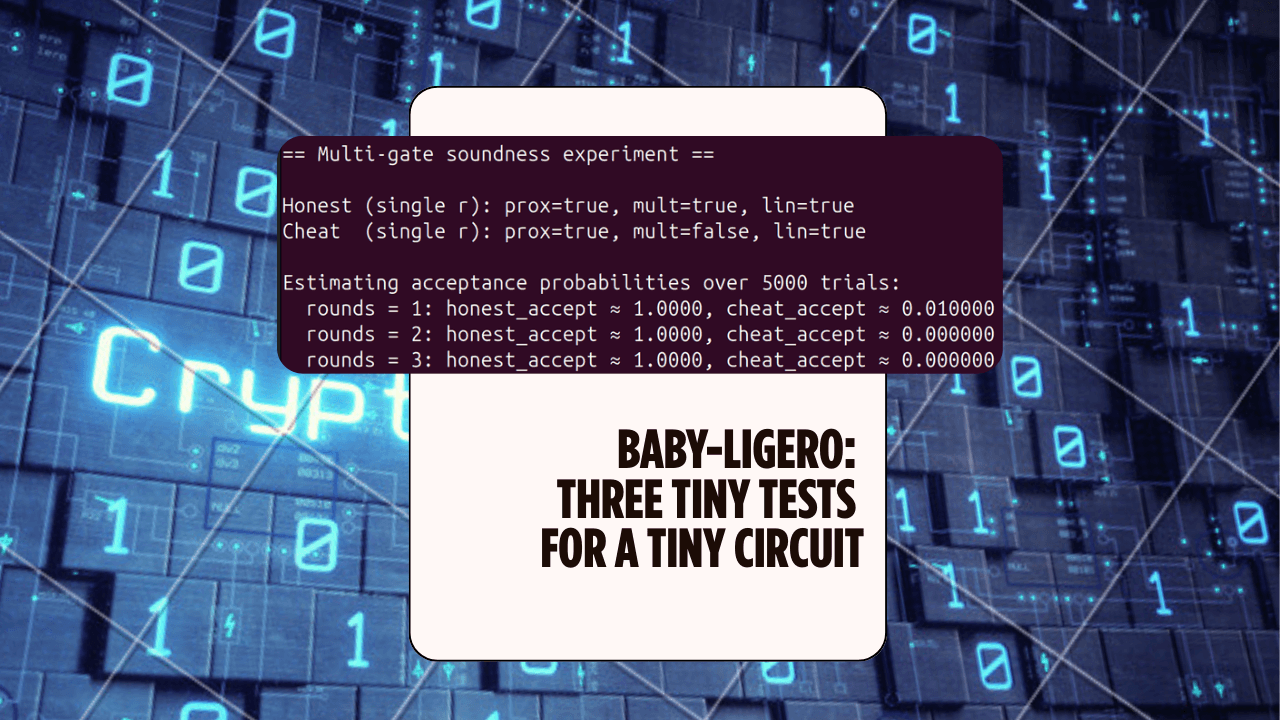

Baby-Ligero: Three Tiny Tests for a Tiny Circuit — ZK Hack S3M5

A mini Rust lab that implements a baby version of Ligero's three tests — proximity, multiplication, and linear — for a tiny arithmetic circuit, and uses them to see soundness amplification in action.