DeFi Bots Series — Part 7: The Monitor Test Saga (Making the Monitor Debuggable)

Continuing from Part 7: The Monitor Test Saga (where we stress-tested a one-sided, USDC-anchored LP strategy, wired TVL/fees gates, and introduced lineage PnL), this chapter is about refactors that made the whole machine easy to reason about. Fewer flags, clearer data flow, single sources of truth, and tests that catch the dumb stuff before Solscan does.

One source of truth for lineage PnL (+ HODL & IL)

Problem. PnL/HODL math lived in two places (getLineageCloseSummaryFromFlows and getLineageLedger), and the two drifted apart.

Solution. Centralize it in getLineageRollup, which (a) prices every flow at its own row price and (b) optionally computes HODL/IL at a provided mark (current or close-time).

```typescript
export type LineageRollup = {
  rows: number;
  hasLedger: boolean;

  // row-priced rollup
  depositsUsd: number;
  withdrawalsUsd: number;
  realisedFeeUsd: number;
  costBasisUsd: number; // deposits - withdrawals
  realisedPnlUsd: number; // withdrawals + fees - deposits

  // token sums for DEPOSIT/WITHDRAWAL (UI units)
  depAUi: number;
  depBUi: number;
  withdrawnAUi: number;
  withdrawnBUi: number;

  // marks (optional)
  hodlUsd?: number; // depAUi*markA + depBUi*markB
  proceedsMarkedUsd?: number; // withdrawnAUi*markA + withdrawnBUi*markB
  ilUsdMarked?: number; // proceedsMarkedUsd - hodlUsd
  ilUsdRow?: number; // withdrawalsUsd - hodlUsd (row vs HODL)

  // handy ratios to avoid UI math
  realisedPctOnDeposits: number; // realisedPnlUsd / depositsUsd * 100
};
```

Note on IL sign. We report IL as proceedsMarkedUsd - hodlUsd. Negative = underperformed HODL; positive = outperformed. If you want the “gap to HODL” with the opposite sign, use -ilUsdMarked.
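To make the sign convention concrete, here is a dependency-free sketch of just the mark-to-market step. MarkInputs and markToHodl are illustrative names, not part of the codebase; the three formulas are the ones documented in the LineageRollup comments, and the numbers describe a made-up one-sided SOL bid that ended up fully converted into token A.

```typescript
// Illustrative input shape: token sums in UI units plus USD marks.
type MarkInputs = {
  depAUi: number;
  depBUi: number;
  withdrawnAUi: number;
  withdrawnBUi: number;
  markAUsd: number;
  markBUsd: number;
};

function markToHodl(m: MarkInputs) {
  // What the deposited tokens would be worth at the mark if we had simply held them.
  const hodlUsd = m.depAUi * m.markAUsd + m.depBUi * m.markBUsd;
  // What the withdrawn tokens are worth at the same mark.
  const proceedsMarkedUsd = m.withdrawnAUi * m.markAUsd + m.withdrawnBUi * m.markBUsd;
  // Negative = underperformed HODL, positive = outperformed.
  const ilUsdMarked = proceedsMarkedUsd - hodlUsd;
  return { hodlUsd, proceedsMarkedUsd, ilUsdMarked, gapToHodlUsd: -ilUsdMarked };
}

// Deposit 1 SOL one-sided; the range crosses and we exit fully in token A.
const ilExample = markToHodl({
  depAUi: 0,
  depBUi: 1,
  withdrawnAUi: 2000,
  withdrawnBUi: 0,
  markAUsd: 0.04,
  markBUsd: 110,
});
console.log(ilExample);
```

With these inputs hodlUsd is 110 and proceedsMarkedUsd is 80, so ilUsdMarked is -30 (the LP leg lagged HODL) and the opposite-sign gap is +30.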

So we merged the two old, redundant functions into getLineageRollup, simplifying its calculations and outputs so it can be reused both when monitoring an active position and in the report for a closed one.
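The row-priced half of the rollup is plain arithmetic once each flow has a USD value. A minimal sketch, assuming the per-row USD is already computed (in getLineageRollup it comes from the amounts, the pool decimals and price_a/price_b); rollupRowPriced and PricedFlow are illustrative names, and the values are the usd column printed by the unit test further down, so the results can be checked against its assertions.

```typescript
type FlowKind = "DEPOSIT" | "WITHDRAWAL" | "FEE_CLAIM";
// Illustrative pre-priced row: usd = aUi * price_a + bUi * price_b at the time of the flow.
type PricedFlow = { type: FlowKind; usd: number };

function rollupRowPriced(flows: PricedFlow[]) {
  let depositsUsd = 0;
  let withdrawalsUsd = 0;
  let realisedFeeUsd = 0;
  for (const f of flows) {
    if (f.type === "DEPOSIT") depositsUsd += f.usd;
    else if (f.type === "WITHDRAWAL") withdrawalsUsd += f.usd;
    else realisedFeeUsd += f.usd; // FEE_CLAIM
  }
  const costBasisUsd = depositsUsd - withdrawalsUsd;
  const realisedPnlUsd = withdrawalsUsd + realisedFeeUsd - depositsUsd;
  const realisedPctOnDeposits = depositsUsd > 0 ? (realisedPnlUsd / depositsUsd) * 100 : 0;
  return { depositsUsd, withdrawalsUsd, realisedFeeUsd, costBasisUsd, realisedPnlUsd, realisedPctOnDeposits };
}

// The usd column of the nine-row PUMP/SOL lineage used in the unit test.
const lineageRows: PricedFlow[] = [
  { type: "DEPOSIT", usd: 90.262553 },
  { type: "WITHDRAWAL", usd: 88.06829 },
  { type: "FEE_CLAIM", usd: 1.521462 },
  { type: "DEPOSIT", usd: 88.923856 },
  { type: "WITHDRAWAL", usd: 87.255697 },
  { type: "FEE_CLAIM", usd: 0.174006 },
  { type: "DEPOSIT", usd: 88.103203 },
  { type: "FEE_CLAIM", usd: 0.166133 },
  { type: "WITHDRAWAL", usd: 86.686808 },
];
const rowRoll = rollupRowPriced(lineageRows);
console.log(rowRoll.costBasisUsd.toFixed(6), rowRoll.realisedPnlUsd.toFixed(6));
```

This gives a cost basis of about 5.2788 USD and a realised PnL of about -3.4172 USD, matching the asserted 5.278817234 and -3.417217082 up to the six-decimal rounding of the printed column.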

```typescript
import type { SupabaseClient } from "@/infra/supabase/SupabaseClient";
import type { LineageRollup } from "./types";

export async function getLineageRollup(params: {
  storage: SupabaseClient;
  userId: number;
  poolAddress: string;
  lineageId: string;
  // Pool orientation + decimals
  tokenX: string; // pool token X mint
  tokenY: string; // pool token Y mint
  decX: number;
  decY: number;
  // optional marks (current or close-time price)
  markAUsd?: number; // if given → hodlUsd computed
  markBUsd?: number;
}): Promise<LineageRollup> {
  // inputs: storage & pool orientation + optional marks
  const { storage, userId, poolAddress, lineageId, tokenX, tokenY, decX, decY, markAUsd, markBUsd } = params;

  // ---------- Ledger over lineage ----------
  const flows = await storage.flow.getFlowsByLineage(userId, poolAddress, lineageId);

  // Row-priced USD accumulators and UI-unit token sums
  let depositsUsd = 0;
  let withdrawalsUsd = 0;
  let realisedFeeUsd = 0;
  let depAUi = 0;
  let depBUi = 0;
  let withdrawnAUi = 0;
  let withdrawnBUi = 0;

  for (const f of flows) {
    // Map A/B in the flow to pool X/Y to apply proper decimals & prices
    const rowDecA = f.token_a_mint === tokenX ? decX : decY;
    const rowDecB = f.token_b_mint === tokenY ? decY : decX;
    const aUi = Number(f.amount_a) / 10 ** rowDecA;
    const bUi = Number(f.amount_b) / 10 ** rowDecB;
    const hasA = Math.abs(aUi) > 0;
    const hasB = Math.abs(bUi) > 0;
    const priceA = f.price_a != null ? Number(f.price_a) : NaN;
    const priceB = f.price_b != null ? Number(f.price_b) : NaN;

    // A non-zero leg without a usable row price would silently skew PnL, so fail loudly
    if (hasA && !Number.isFinite(priceA)) {
      throw new Error(`row price_a missing/invalid for flow id=${(f as any).id ?? "?"}`);
    }
    if (hasB && !Number.isFinite(priceB)) {
      throw new Error(`row price_b missing/invalid for flow id=${(f as any).id ?? "?"}`);
    }

    const usd = (hasA ? aUi * priceA : 0) + (hasB ? bUi * priceB : 0);

    if (f.event_type === "DEPOSIT") {
      depositsUsd += usd;
      depAUi += aUi;
      depBUi += bUi;
    } else if (f.event_type === "WITHDRAWAL") {
      withdrawalsUsd += usd;
      withdrawnAUi += aUi;
      withdrawnBUi += bUi;
    } else if (f.event_type === "FEE_CLAIM") {
      realisedFeeUsd += usd;
    }
  }

  const costBasisUsd = depositsUsd - withdrawalsUsd;
  const realisedPnlUsd = withdrawalsUsd + realisedFeeUsd - depositsUsd;
  const realisedPctOnDeposits = depositsUsd > 0 ? (realisedPnlUsd / depositsUsd) * 100 : 0;

  const out: LineageRollup = {
    rows: flows.length,
    hasLedger: flows.length > 0,
    depositsUsd,
    withdrawalsUsd,
    realisedFeeUsd,
    costBasisUsd,
    realisedPnlUsd,
    depAUi,
    depBUi,
    withdrawnAUi,
    withdrawnBUi,
    realisedPctOnDeposits,
  };

  // HODL/IL only when both marks are provided
  if (Number.isFinite(markAUsd) && Number.isFinite(markBUsd)) {
    const mA = markAUsd as number;
    const mB = markBUsd as number;
    const hodlUsd = depAUi * mA + depBUi * mB;
    const proceedsMarkedUsd = withdrawnAUi * mA + withdrawnBUi * mB;
    out.hodlUsd = hodlUsd;
    out.proceedsMarkedUsd = proceedsMarkedUsd;
    out.ilUsdMarked = proceedsMarkedUsd - hodlUsd;
    out.ilUsdRow = withdrawalsUsd - hodlUsd;
  }

  return out;
}
```

Unit-Testing PnL

So far, development has been fast and frantic. Looking at the code, I realized it was growing too much, and that the bot will need much more than expected to run fully on its own, with as little intervention from me as possible.

So I started adding unit tests. For this one, I downloaded a position-lineage chunk from the database, cross-checked the PnL by hand, and pinned the results:

```typescript
import type { LPFlow, LPPosition } from "@/infra/supabase/types";
import { test, expect, mock } from "bun:test";
import fs from "fs";
import path from "path";

const FLOWS_PATH = path.resolve("data/lp_position_flows.json");
const POSITION_PATH = path.resolve("data/lp_position.json");
const FLOWS: LPFlow[] = JSON.parse(fs.readFileSync(FLOWS_PATH, "utf8"));
const POS: LPPosition = JSON.parse(fs.readFileSync(POSITION_PATH, "utf8"));

// PUMP(6), SOL(9)
const DEC_X = 6;
const DEC_Y = 9;

// Replace the Supabase client with an in-memory stub over the exported flows
mock.module("@/infra/supabase/SupabaseClient", () => {
  class SupabaseClient {
    flow = {
      getFlowsByLineage: async (userId: number, poolAddr: string, lineageId: string) =>
        FLOWS.filter(
          (f) =>
            f.user_id === userId &&
            f.pool_address === poolAddr &&
            f.lineage_id === lineageId
        ),
    };
  }
  return { SupabaseClient };
});

test("PnL from getLineageRollup()", async () => {
  const { getLineageRollup } = await import("@/metrics/pnl/lineage");
  const roll = await getLineageRollup({
    storage: new (await import("@/infra/supabase/SupabaseClient")).SupabaseClient() as any,
    userId: POS.user_id,
    poolAddress: POS.pool_address,
    lineageId: POS.lineage_id!,
    tokenX: POS.token_a_mint,
    tokenY: POS.token_b_mint,
    decX: DEC_X,
    decY: DEC_Y,
    markAUsd: 0.00366165, // current/close PUMP USD
    markBUsd: 175.92244741611887, // current/close SOL USD
  });

  expect(roll.rows).toBe(9);
  expect(roll.costBasisUsd).toBeCloseTo(5.278817234, 6);
  expect(roll.realisedFeeUsd).toBeCloseTo(1.861600142, 6);
  expect(roll.realisedPnlUsd).toBeCloseTo(-3.417217082, 6);

  const ilDisplay = -(roll.ilUsdRow!);
  expect(ilDisplay).toBeCloseTo(1.87287661, 6);

  expect(roll.realisedPctOnDeposits).toBeCloseTo(
    (roll.realisedPnlUsd / roll.depositsUsd) * 100,
    6
  );

  // (purely for visibility; no assertions on this block)
  const rows = FLOWS.filter((f) => f.lineage_id === POS.lineage_id)
    .map((f) => {
      const a_ui = Number(f.amount_a) / 10 ** DEC_X;
      const b_ui = Number(f.amount_b) / 10 ** DEC_Y;
      const priceA = Number(f.price_a);
      const priceB = Number(f.price_b);
      const usd = a_ui * priceA + b_ui * priceB;
      return {
        ts: f.timestamp,
        type: f.event_type,
        a_ui: Number.isFinite(a_ui) ? Number(a_ui.toFixed(6)) : a_ui,
        b_ui: Number.isFinite(b_ui) ? Number(b_ui.toFixed(9)) : b_ui,
        priceA: Number(priceA.toFixed(12)),
        priceB: Number(priceB.toFixed(6)),
        usd: Number(usd.toFixed(6)),
      };
    })
    .sort((a, b) => (a.ts! < b.ts! ? -1 : 1));

  // Pretty print breakdown to help eyeball sums
  console.table(rows);
});
```

Let's review the results:

```
$ bun test tests/pnl.test.ts
bun test v1.2.23 (cf136713)

tests/pnl.test.ts:
┌───┬────────────────────────────┬────────────┬──────────────┬─────────────┬────────────────┬────────────┬───────────┐
│ │ ts │ type │ a_ui │ b_ui │ priceA │ priceB │ usd │
├───┼────────────────────────────┼────────────┼──────────────┼─────────────┼────────────────┼────────────┼───────────┤
│ 0 │ 2025-10-11 19:18:34.242+00 │ DEPOSIT │ 0 │ 0.5 │ 0.003881488069 │ 180.525107 │ 90.262553 │
│ 1 │ 2025-10-11 20:02:12.041+00 │ WITHDRAWAL │ 23219.938468 │ 0 │ 0.003792787403 │ 177.847711 │ 88.06829 │
│ 2 │ 2025-10-11 20:02:12.199+00 │ FEE_CLAIM │ 221.804049 │ 0.003824656 │ 0.003792787403 │ 177.847711 │ 1.521462 │
│ 3 │ 2025-10-11 20:02:15.805+00 │ DEPOSIT │ 0 │ 0.5 │ 0.003792787403 │ 177.847711 │ 88.923856 │
│ 4 │ 2025-10-11 20:47:27.251+00 │ WITHDRAWAL │ 23451.290384 │ 0 │ 0.003720720496 │ 176.206405 │ 87.255697 │
│ 5 │ 2025-10-11 20:47:27.389+00 │ FEE_CLAIM │ 44.949036 │ 0.00003838 │ 0.003720720496 │ 176.206405 │ 0.174006 │
│ 6 │ 2025-10-11 20:47:31.191+00 │ DEPOSIT │ 0 │ 0.5 │ 0.003720720496 │ 176.206405 │ 88.103203 │
│ 7 │ 2025-10-11 21:02:36.855+00 │ FEE_CLAIM │ 45.397712 │ 0 │ 0.003659493266 │ 175.922447 │ 0.166133 │
│ 8 │ 2025-10-11 21:02:36.965+00 │ WITHDRAWAL │ 23688.199791 │ 0 │ 0.003659493266 │ 175.922447 │ 86.686808 │
└───┴────────────────────────────┴────────────┴──────────────┴─────────────┴────────────────┴────────────┴───────────┘
✓ PnL from getLineageRollup() [8.00ms]

1 pass
0 fail
5 expect() calls
Ran 1 test across 1 file. [57.00ms]
```

Solving connection issues

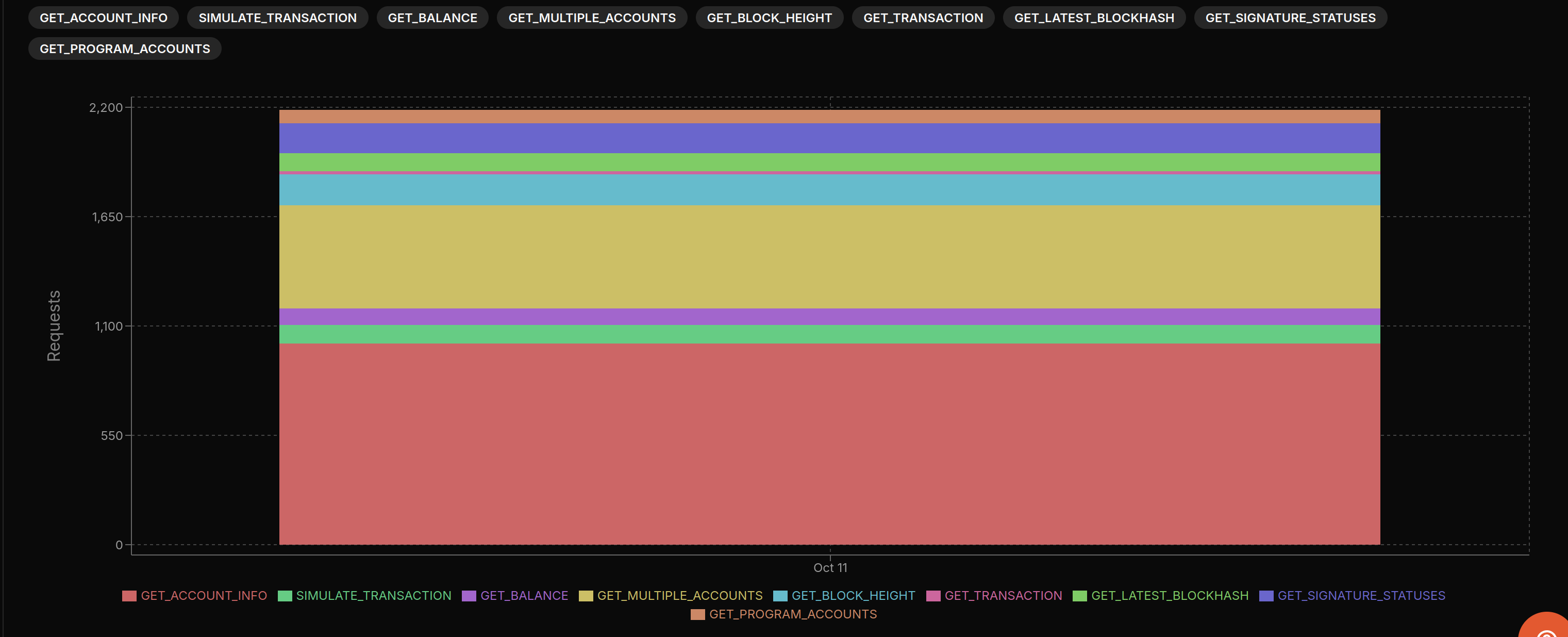

We tightened the transport layer so the bot stays healthy on free RPC tiers:

- Role-separated endpoints with round-robin rotation: distinct pools for read, submit, and confirm. The picker rotates to spread load and avoid per-IP throttles on a single host.

- WebSocket confirmations when available; polling fallback otherwise. We auto-detect WS; if it's missing (e.g., free Alchemy), we fall back to efficient polling with jittered backoff.

- 429 handling and backoff on hot paths: sendRawTransaction, getLatestBlockhash, ALT fetches, signature status. Transient rate limits retry with decorrelated jitter.

- Blockhash freshness: we patch the blockhash right before sign/submit with "processed" commitment to reduce expiries, and confirm at "confirmed" by default.

- HTTP keep-alive and connection reuse: a shared https.Agent with keepAlive and a raised maxSockets cuts TLS churn.

- Account I/O cuts:

  - Mint decimals cached (LRU + TTL) and fetched in batches with getMultipleAccountsInfo to avoid a double getAccountInfo.

  - Associated token balances read in a single pass and only parsed if the account exists.

Net effect: fewer RPC calls, fewer 429s, faster confirms.
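Decorrelated jitter, as described in the AWS Architecture Blog post on exponential backoff and jitter, draws each delay uniformly between a base and three times the previous delay, then caps it. A minimal sketch of a 429-only retry wrapper follows; withRateLimitRetry, the isRateLimited string check and the option names are assumptions for illustration, not the bot's actual helper. The random source and sleep are injectable so the schedule can be tested without waiting.

```typescript
type RetryOpts = {
  baseMs: number; // smallest delay between attempts
  capMs: number; // largest delay between attempts
  maxAttempts: number; // total tries, including the first one
  random?: () => number; // uniform in [0, 1); injectable for tests
  sleep?: (ms: number) => Promise<void>; // injectable for tests
};

// Assumption: rate limits surface as errors whose message mentions 429 / Too Many Requests.
function isRateLimited(err: unknown): boolean {
  const msg = err instanceof Error ? err.message : String(err);
  return msg.includes("429") || /too many requests/i.test(msg);
}

// Decorrelated jitter: next = min(cap, uniform(base, prev * 3)).
function nextDelay(prevMs: number, o: RetryOpts, rnd: () => number): number {
  const hi = Math.max(o.baseMs, prevMs * 3);
  return Math.min(o.capMs, o.baseMs + rnd() * (hi - o.baseMs));
}

async function withRateLimitRetry<T>(fn: () => Promise<T>, o: RetryOpts): Promise<T> {
  const rnd = o.random ?? Math.random;
  const sleep = o.sleep ?? ((ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms)));
  let delay = o.baseMs;
  for (let attempt = 1; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      // Only 429s are retried; anything else, or the final attempt, propagates.
      if (!isRateLimited(err) || attempt >= o.maxAttempts) throw err;
      delay = nextDelay(delay, o, rnd);
      await sleep(delay);
    }
  }
}
```

Keeping the retry scoped to rate limits matters on hot paths like sendRawTransaction: a failed simulation or a bad signature should surface immediately rather than burn attempts.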

```typescript
// rpc/profile.ts — role-separated, round-robin rotation + keep-alive
import { Agent as HttpsAgent } from "https";
import { Connection, type Commitment } from "@solana/web3.js";

type Role = "read" | "submit" | "confirm";
// Endpoint config as used below: URL plus optional WS URL and commitment override
type RpcEndpoint = { url: string; wsUrl?: string; commitment?: Commitment };

// One shared keep-alive agent for every HTTP connection
const agent = new HttpsAgent({ keepAlive: true, maxSockets: 128 });

function makeConn(e: RpcEndpoint, def: Commitment): Connection {
  return new Connection(e.url, {
    commitment: e.commitment ?? def,
    httpAgent: agent,
    wsEndpoint: e.wsUrl, // ensure WS for confirm endpoints!
  });
}

export class RpcProfile {
  constructor(
    public readonly read: Connection[],
    public readonly submit: Connection[],
    public readonly confirm: Connection[]
  ) {}

  private rr = { read: 0, submit: 0, confirm: 0 } as Record<Role, number>;

  pick(role: Role): Connection {
    const arr = role === "read" ? this.read : role === "submit" ? this.submit : this.confirm;
    if (arr.length === 0) throw new Error(`no RPC endpoints configured for role "${role}"`);
    const i = this.rr[role];
    this.rr[role] = (i + 1) % arr.length;
    return arr[i];
  }
}
```

```typescript
// BaseExecutor.waitForConfirmation — WS first; otherwise jittered polling
async waitForConfirmation(
  sig: string,
  bh: string,
  lastValid: number,
  caller = "unknown",
  commitment: Commitment = "confirmed"
) {
  const confirmStart = Date.now();

  // HTTP-only endpoint (no WS): poll with confirmWithPolling, bounded by lastValidBlockHeight and a 90 s timeout
  if (isHttpOnly(this._confirmConn)) {
    await confirmWithPolling(this._confirmConn, {
      signature: sig,
      lastValidBlockHeight: lastValid,
      pollIntervalMs: 250,
      timeoutMs: 90_000,
      caller,
    });
    txTimings.labels("confirm", caller).observe((Date.now() - confirmStart) / 1000);
    return true;
  }

  // WS available: web3.js confirmTransaction with the blockhash-expiry strategy
  const res = await this._confirmConn.confirmTransaction(
    { signature: sig, blockhash: bh, lastValidBlockHeight: lastValid },
    commitment
  );
  txTimings.labels("confirm", caller).observe((Date.now() - confirmStart) / 1000);
  if (res.value.err) throw new Error(`Tx failed: ${safeStringify(res.value.err)}`);
  return true;
}
```

Meteora improvements

We had redundant functions spread across modules. This refactor consolidates them into three clear entry points: open, close, and rebalance.

- Pass the full LPPosition from the monitor (no cache lookups).

- The refactor is centered on three files: open, close, rebalance.

- Unified “remove” and “close” via removeOrClosePosition.

- Unified “open” and “add liquidity” via openPositionOrAddLiquidity.

1. New refactor, new life

Until now we were doing it in easy mode: closing the position completely and reopening it with the same parameters. I rescued AddLiquidityParams, and now we just remove the liquidity and re-add it with a function we hadn't used yet: addLiquidityByStrategy.

My MeteoraClient is getting more functional:

```typescript
import DLMM, { type PositionData } from "@meteora-ag/dlmm";
import BN from "bn.js";
import { Connection, Keypair, PublicKey, Transaction, VersionedTransaction } from "@solana/web3.js";
// Project-local (not shown): CreatePositionParams, ClosePositionParams, AddLiquidityParams,
// RemoveLiquidityParams, strategyForPlacement, toVersioned

const DEFAULT_DLMM_API = "https://dlmm-api.meteora.ag";

export class MeteoraClient {
  constructor(
    private conn: Connection,
    private dlmmApi: string = DEFAULT_DLMM_API
  ) {}

  public getConn() {
    return this.conn;
  }

  // Per-pool cache: repeated calls within 30 s reuse the same DLMM instance
  private pools = new Map<string, { dlmm: DLMM; at: number }>();
  private TTL_MS = 30_000;

  /** fetch (or cache) a pool */
  async getPool(poolAddr: string): Promise<DLMM> {
    const now = Date.now();
    const c = this.pools.get(poolAddr);
    if (c && now - c.at < this.TTL_MS) return c.dlmm;
    const dlmm = await DLMM.create(this.conn, new PublicKey(poolAddr));
    this.pools.set(poolAddr, { dlmm, at: now });
    return dlmm;
  }

  /**
   * Gets information about a user's position
   * @param poolAddress Pool address
   * @param userPublicKey User's public key
   * @returns Position information
   */
  async checkUserPositions(
    poolAddress: string,
    userPublicKey: string
  ): Promise<{ pubkey: PublicKey; data: PositionData }[]> {
    /* ... */
  }

  /**
   * Creates a new position and adds liquidity using a strategy
   * @param params Parameters for creating a position
   * @returns Transaction for creating position
   */
  async createPosition(params: CreatePositionParams): Promise<{
    transaction: VersionedTransaction;
    position: string;
    signer: Keypair;
  }> {
    const { minBinId, maxBinId } = params;
    if (minBinId === maxBinId) {
      throw new Error("min bin == max bin");
    }
    const totalXAmount = new BN(params.amountARaw.toString());
    const totalYAmount = new BN(params.amountBRaw.toString());

    // Fresh keypair for the new position account; it must co-sign the transaction
    const newBalancePosition = new Keypair();
    const pool = await this.getPool(params.poolAddress);
    const legacyTx = await pool.initializePositionAndAddLiquidityByStrategy({
      positionPubKey: newBalancePosition.publicKey,
      user: new PublicKey(params.userPublicKey),
      totalXAmount,
      totalYAmount,
      strategy: {
        maxBinId,
        minBinId,
        strategyType: strategyForPlacement(params.strategy),
        singleSidedX: params.singleSidedX,
      },
    });
    legacyTx.feePayer = new PublicKey(params.userPublicKey);

    return {
      transaction: toVersioned(legacyTx),
      position: newBalancePosition.publicKey.toBase58(),
      signer: newBalancePosition,
    };
  }

  /**
   * Closes a position
   * @param params Parameters for closing a position
   * @returns Transaction for closing position
   */
  async closePosition(params: ClosePositionParams): Promise<VersionedTransaction> {
    const pool = await this.getPool(params.poolAddress);
    const position = await pool.getPosition(new PublicKey(params.positionPublicKey));
    const legacyTx = await pool.closePosition({
      owner: new PublicKey(params.userPublicKey),
      position,
    });
    legacyTx.feePayer = new PublicKey(params.userPublicKey);
    return toVersioned(legacyTx);
  }

  /**
   * Adds liquidity to an existing position using a strategy
   * @param params Parameters for adding liquidity
   * @returns Transaction for adding liquidity
   */
  async addLiquidityByStrategy(
    params: AddLiquidityParams
  ): Promise<{ transaction: VersionedTransaction }> {
    const { minBinId, maxBinId } = params;
    if (minBinId === maxBinId) {
      throw new Error("min bin == max bin");
    }
    const totalXAmount = new BN(params.amountARaw.toString());
    const totalYAmount = new BN(params.amountBRaw.toString());

    // Add liquidity to the existing position (no new position account)
    const pool = await this.getPool(params.poolAddress);
    const legacyTx = await pool.addLiquidityByStrategy({
      positionPubKey: new PublicKey(params.positionPubkey),
      user: new PublicKey(params.userPublicKey),
      totalXAmount,
      totalYAmount,
      strategy: {
        maxBinId,
        minBinId,
        strategyType: strategyForPlacement(params.strategy),
        singleSidedX: params.singleSidedX,
      },
    });
    return { transaction: toVersioned(legacyTx) };
  }

  /**
   * Removes liquidity from a position
   * @param params Parameters for removing liquidity
   * @returns Transaction for removing liquidity
   */
  async removeLiquidity(params: RemoveLiquidityParams): Promise<Transaction[]> {
    const pool = await this.getPool(params.poolAddress);
    const { userPositions } = await pool.getPositionsByUserAndLbPair(
      new PublicKey(params.userPublicKey)
    );
    const userPosition = userPositions.find(({ publicKey }) =>
      publicKey.equals(new PublicKey(params.positionPublicKey))
    );
    if (!userPosition) {
      throw new Error(
        `Unable to remove liquidity from position ${params.positionPublicKey}: the user doesn't own it`
      );
    }

    // Clamp the requested bin range to the bins the position actually holds
    const binIdsToRemove = userPosition.positionData.positionBinData.map((bin) => bin.binId);
    const lowestBin = binIdsToRemove[0];
    const highestBin = binIdsToRemove[binIdsToRemove.length - 1];
    // Bin ids can be 0 or negative, so check for "not provided" instead of falsiness
    let fromBinId = params.fromBinId;
    let toBinId = params.toBinId;
    if (fromBinId == null || fromBinId < lowestBin) fromBinId = lowestBin;
    if (toBinId == null || toBinId > highestBin) toBinId = highestBin;
    const removeBps = params.removeBps || 10_000; // default: remove 100%

    const result = await pool.removeLiquidity({
      position: new PublicKey(params.positionPublicKey),
      user: new PublicKey(params.userPublicKey),
      fromBinId,
      toBinId,
      bps: new BN(removeBps),
      shouldClaimAndClose: params.shouldClaimAndClose,
    });

    // Normalize: the SDK may return one transaction or an array
    const transactions = Array.isArray(result) ? result : [result];
    return transactions.map((tx) => {
      tx.feePayer = new PublicKey(params.userPublicKey);
      return tx;
    });
  }
}
```

2. Merging remove and close

I merged the “close with fees” and “remove only” paths:

```typescript
export async function removeOrClosePosition({
  solAddr,
  creds,
  position,
  claimAndClose,
}: {
  solAddr: string;
  creds: SignCreds;
  position: LPPosition;
  claimAndClose: boolean;
}): Promise<{
  txHash: string;
  feeARaw: bigint;
  feeBRaw: bigint;
  withdrawnARaw: bigint;
  withdrawnBRaw: bigint;
  priceA: number;
  priceB: number;
  decimalsA: number;
  decimalsB: number;
}> {
  // mint meta, current prices, fees/liquidity (raw)
  // ... (elided: loads the on-chain position `target`, tokenAMint/tokenBMint,
  //      priceA/priceB and decimalsA/decimalsB used below)

  // Fees & liquidity (raw)
  const feeXRaw = toBig(target.data.feeXExcludeTransferFee ?? target.data.feeX);
  const feeYRaw = toBig(target.data.feeYExcludeTransferFee ?? target.data.feeY);
  const liqXRaw = toBig(target.data.totalXAmount);
  const liqYRaw = toBig(target.data.totalYAmount);
  const hasLiquidity = liqXRaw > 0n || liqYRaw > 0n;

  async function recordFeeClaimAndClose() {
    /* ... */
  }

  let txHash = "";

  if (hasLiquidity) {
    // Remove 100% of the bins; optionally claim fees and close in the same batch
    logger.info("[closePosition] removeLiquidity + claim + close");
    const txs = await meteora.removeLiquidity({
      poolAddress: position.pool_address,
      userPublicKey: solAddr,
      positionPublicKey: position.position_pubkey,
      fromBinId: target.data.lowerBinId,
      toBinId: target.data.upperBinId,
      removeBps: 10_000,
      shouldClaimAndClose: claimAndClose,
    } as RemoveLiquidityParams);
    const res = await signPatchSendAll(txs, creds, { keepLegacySigs: true });
    txHash = res.at(-1)?.txSignature ?? "";

    if (claimAndClose) await recordFeeClaimAndClose();

    // Ledger the withdrawal at current prices so the lineage rollup stays row-priced
    await storage.flow.insertFlow({
      user_id: position.user_id,
      position_pubkey: position.position_pubkey,
      pool_address: position.pool_address,
      token_a_mint: tokenAMint,
      token_b_mint: tokenBMint,
      amount_a: liqXRaw.toString(),
      amount_b: liqYRaw.toString(),
      price_a: priceA.toString(),
      price_b: priceB.toString(),
      event_type: "WITHDRAWAL",
      lineage_id: position.lineage_id,
    });
  } else {
    if (!claimAndClose) {
      // Nothing to withdraw and we are not closing: no-op
      logger.info("[removeOrClose] position already empty");
      return {
        txHash: "",
        decimalsA,
        decimalsB,
        feeARaw: 0n,
        feeBRaw: 0n,
        withdrawnARaw: 0n,
        withdrawnBRaw: 0n,
        priceA,
        priceB,
      };
    }

    logger.info("[removeOrClose] no liquidity; closePosition (claims residual fees if any)");
    const tx = await meteora.closePosition({
      poolAddress: position.pool_address,
      userPublicKey: solAddr,
      positionPublicKey: position.position_pubkey,
    });
    const res = await meteoraSend(tx, {
      signer: privySigner,
      creds,
      executor,
    });
    txHash = res.txSignature;
    await recordFeeClaimAndClose();
  }

  return {
    txHash,
    feeARaw: feeXRaw,
    feeBRaw: feeYRaw,
    withdrawnARaw: liqXRaw,
    withdrawnBRaw: liqYRaw,
    priceA,
    priceB,
    decimalsA,
    decimalsB,
  };
}
```

- Rebalance calls it with claimAndClose=false (cheap, keeps the lineage alive).

- Hard exit calls it with claimAndClose=true (records fees and deletes the row).

3. Open or add liquidity: one path, two modes

- On a new position → createPosition.

- On rebalance (same position) → addLiquidityByStrategy.

```typescript
export async function openPositionOrAddLiquidity({
  solAddr,
  creds,
  poolAddress,
  placement,
  plan,
  position,
  userId,
  rebalance,
  openedBy,
}: {
  solAddr: string;
  creds: SignCreds;
  poolAddress: string;
  placement: LiquidityStrategy;
  plan: OpenPlan;
  position: LPPosition;
  userId: number;
  rebalance: boolean;
  openedBy?: "AUTO_OPEN" | "LP_COPY" | "MANUAL" | "REBALANCE";
}): Promise<{
  txSig: string;
  newPosition: string;
  amountX: bigint;
  amountY: bigint;
}> {
  // plan.flip swaps which pool mint is treated as A and which as B
  const [mintForA, mintForB] = plan.flip
    ? [position.token_b_mint, position.token_a_mint]
    : [position.token_a_mint, position.token_b_mint];

  const { fundedAmountARaw, fundedAmountBRaw } = await ensureFromBaseBalances({ /* ... */ });

  const createPositionParams: CreatePositionParams = {
    poolAddress,
    userPublicKey: solAddr,
    amountARaw: fundedAmountARaw,
    amountBRaw: fundedAmountBRaw,
    strategy: placement,
    minBinId: plan.minId,
    maxBinId: plan.maxId,
    ...(plan.singleSidedX !== undefined ? { singleSidedX: plan.singleSidedX } : {}),
  };

  let positionPubkey = position.position_pubkey;
  let signer: Keypair | undefined;
  let transaction: VersionedTransaction;

  if (rebalance) {
    // Same position account: just add liquidity over the new band
    const rebalanceParams = { ...createPositionParams, positionPubkey } as AddLiquidityParams;
    logger.info(`[openOrAddLiquidity] add liquidity params: ${safeStringify(rebalanceParams)}`);
    const { transaction: vTx } = await meteora.addLiquidityByStrategy(rebalanceParams);
    transaction = vTx;
  } else {
    // New position account: its keypair must co-sign
    logger.info(`[openOrAddLiquidity] create params: ${safeStringify(createPositionParams)}`);
    const { transaction: vTx, position: pKey, signer: s } =
      await meteora.createPosition(createPositionParams);
    transaction = vTx;
    positionPubkey = pKey;
    signer = s;
  }

  const { txSignature, explorerUrl } = await meteoraSend(transaction, {
    signer: privySigner,
    creds,
    executor,
    extraSigners: signer ? [signer] : undefined,
    keepLegacySigs: true,
  });

  // etc
}
```

Note: we fund just enough with ensureFromBaseBalances:

- Tries ExactOut first, falls back to ExactIn with headroom.

- Reserves SOL (0.2) for fees.

- Has a small tolerance instead of “infinite top-up” loops.

Jupiter helper fixes and improvements

We fixed ensureFromBaseBalances, sweepLeftoversFromClose and consolidateToAllocation:

- A sweep that won’t drain our SOL.

- We keep a tiny reserve of SOL.

- If what we received is close enough, we use it and skip the funding-loop trap.

Hard defaults we rely on:

```typescript
export const WSOL_MINT = "So11111111111111111111111111111111111111112";
export const SOL_RESERVE_SOL = 0.2; // always keep 0.2 SOL
export const HEADROOM_BPS = 300; // +3% budget on quotes for ExactIn fallback
export const TOLERANCE_BPS = 50; // accept up to 0.5% shortfall and shrink
```

Excerpts:

- Funding and swaps that don’t shoot us in the foot

ensureFromBaseBalances (fund from USDC; keep SOL for fees)

- Try ExactOut (precise deficits).

- Fallback to ExactIn with headroom.

- Keep 0.2 SOL.

- Tolerance instead of infinite top-ups (if off by dust, we shrink the request).

We also apply a tolerance clamp: if post-swap we’re short by dust, we shrink the request instead of looping swaps.

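```typescript
// ensureFromBaseBalances.ts (highlights)
const SOL_RESERVE = 200_000_000n; // 0.2 SOL in lamports

// Math.min does not accept bigint, so compare explicitly
const minBig = (a: bigint, b: bigint): bigint => (a < b ? a : b);

// ...

// after swaps: if a tiny shortfall remains, shrink the requested amounts to what we hold instead of looping another swap
return { fundedAmountARaw: minBig(nowA, amountARaw), fundedAmountBRaw: minBig(nowB, amountBRaw) };
```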
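The three constants boil down to a little integer math on raw amounts. A sketch using bigint basis points; exactInBudget, spendableSol and settleFunding are hypothetical names, the Jupiter calls are left out, and what to do outside the tolerance (one more bounded swap or an error) is left to the caller.

```typescript
// Mirrors SOL_RESERVE_SOL, HEADROOM_BPS and TOLERANCE_BPS above, as bigint.
const SOL_RESERVE_LAMPORTS = 200_000_000n; // 0.2 SOL
const HEADROOM_BPS = 300n; // +3% on ExactIn budgets
const TOLERANCE_BPS = 50n; // accept up to 0.5% shortfall
const BPS = 10_000n;

// ExactIn fallback budget: quoted input plus headroom, rounded up so small amounts are not under-funded.
function exactInBudget(quotedInRaw: bigint): bigint {
  return (quotedInRaw * (BPS + HEADROOM_BPS) + BPS - 1n) / BPS;
}

// Lamports that may be swapped away without touching the fee reserve.
function spendableSol(balanceLamports: bigint): bigint {
  return balanceLamports > SOL_RESERVE_LAMPORTS ? balanceLamports - SOL_RESERVE_LAMPORTS : 0n;
}

// After swaps: a dust shortfall shrinks the request; a real shortfall is flagged instead of looping.
function settleFunding(haveRaw: bigint, wantRaw: bigint): { fundedRaw: bigint; needsTopUp: boolean } {
  if (haveRaw >= wantRaw) return { fundedRaw: wantRaw, needsTopUp: false };
  const shortfall = wantRaw - haveRaw;
  // shortfall / want <= TOLERANCE_BPS / 10_000, cross-multiplied to stay in integers
  const withinTolerance = shortfall * BPS <= wantRaw * TOLERANCE_BPS;
  return withinTolerance
    ? { fundedRaw: haveRaw, needsTopUp: false }
    : { fundedRaw: wantRaw, needsTopUp: true };
}
```

For example, a 1,000,000-unit quote gets a 1,030,000-unit ExactIn budget, and a 4,000-unit shortfall on a 1,000,000-unit request (0.4%) is absorbed by shrinking the request rather than triggering another swap.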

- Sweep leftovers after closing a position:

The swap only spends the credits from this close (withdrawn + claimed fees). It never touches unrelated wallet balances.

```typescript
// sweepLeftoversFromClose.ts (core idea)
export async function sweepLeftoversFromClose({
  executor, privySigner, creds, supabase, wallet, sinkMint, credits, userId, minUsd = 2,
}: {
  credits: Record<string, bigint>; // {mint -> raw amount} from THIS close (withdrawn + fees)
  // ...
}) {
  const prices = await getPricesUsd([sinkMint, ...Object.keys(credits)]);
  for (const [mint, raw] of Object.entries(credits)) {
    if (mint === sinkMint) continue;
    const ui = Number(raw) / 10 ** (await getMintDecimals(executor.readConnection, new PublicKey(mint)));
    const px = prices[normalize(mint)] ?? 0;
    if (ui * px < minUsd) continue; // dust
    await performJupiterSwapExactIn({ inputMint: mint, outputMint: sinkMint, amountInRaw: raw, /* … */ });
  }
}
```

This is called only on close. On rebalance we skip sweeping (we still need the assets to reopen).
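Without the RPC and Jupiter calls, the sweep is a filter over this close's credits. A dependency-free sketch; planSweep and the explicit decimals and price maps are illustrative stand-ins for getMintDecimals and getPricesUsd, and the mint names in the example are placeholders.

```typescript
type SweepOrder = { mint: string; amountInRaw: bigint; usd: number };

// Only the credits from this close are considered, so unrelated wallet balances are never touched.
function planSweep(
  credits: Record<string, bigint>, // mint -> raw amount withdrawn + claimed in this close
  decimals: Record<string, number>, // mint -> token decimals
  pricesUsd: Record<string, number>, // mint -> USD price
  sinkMint: string,
  minUsd = 2
): SweepOrder[] {
  const orders: SweepOrder[] = [];
  for (const [mint, raw] of Object.entries(credits)) {
    if (mint === sinkMint || raw <= 0n) continue; // already the sink token, or nothing to sell
    const dec = decimals[mint];
    if (dec === undefined) continue; // unknown decimals: skip rather than guess
    const usd = (Number(raw) / 10 ** dec) * (pricesUsd[mint] ?? 0);
    if (usd < minUsd) continue; // dust
    orders.push({ mint, amountInRaw: raw, usd });
  }
  return orders;
}

const sweepOrders = planSweep(
  { SINK: 5_000_000n, TOKEN_A: 1_000_000_000n, TOKEN_B: 1_000n },
  { SINK: 6, TOKEN_A: 6, TOKEN_B: 6 },
  { SINK: 1, TOKEN_A: 0.0037, TOKEN_B: 0.5 },
  "SINK"
);
console.log(sweepOrders.map((o) => o.mint));
```

With those inputs only TOKEN_A (about 3.70 USD) is swept; TOKEN_B is dust and SINK is already the target.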

- Consolidate to allocation (rebalance only):

We remove liquidity, but the amounts we get back rarely match the desired A/B for the new band. So we add a pre-open consolidation step:

- Does at most two swaps.

- Keeps 0.2 SOL.

- Never spends more than what this rebalance produced (credits!).

```typescript
// consolidateToAllocation.ts (critical clamps)
await consolidateToAllocation({
  executor, wallet: solAddr, creds, privySigner, supabase: storage, userId: position.user_id,
  mintA: position.token_a_mint, mintB: position.token_b_mint, decA, decB,
  desiredA_ui, desiredB_ui,
  creditsARaw: withdrawnARaw, // only what we just got back
  creditsBRaw: withdrawnBRaw,
});
```

Consolidation is credit-bounded (limited to the tokens just withdrawn) and SOL-aware (keeps 0.2 SOL for fees).
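The clamping idea can be sketched for the plain two-token case, where a single directional swap is enough (the real helper allows up to two, and also handles the SOL reserve and slippage, which this sketch omits). planConsolidation and its input shape are hypothetical; amounts are UI units and prices are USD.

```typescript
type ConsolidationSwap = { from: "A" | "B"; to: "A" | "B"; amountInUi: number };

// Move credits toward (desiredA, desiredB) with one swap, never selling more than the surplus
// this rebalance actually returned.
function planConsolidation(p: {
  creditsA: number; // UI units returned by the remove
  creditsB: number;
  desiredA: number; // UI units wanted for the new band
  desiredB: number;
  priceA: number; // USD marks
  priceB: number;
}): ConsolidationSwap | null {
  const surplusA = Math.max(0, p.creditsA - p.desiredA);
  const surplusB = Math.max(0, p.creditsB - p.desiredB);
  const deficitA = Math.max(0, p.desiredA - p.creditsA);
  const deficitB = Math.max(0, p.desiredB - p.creditsB);
  if (deficitB > 0 && surplusA > 0) {
    // A → B: sell just enough A to cover B's deficit, capped at the A surplus
    const needA = (deficitB * p.priceB) / p.priceA;
    return { from: "A", to: "B", amountInUi: Math.min(surplusA, needA) };
  }
  if (deficitA > 0 && surplusB > 0) {
    // B → A: the mirror case
    const needB = (deficitA * p.priceA) / p.priceB;
    return { from: "B", to: "A", amountInUi: Math.min(surplusB, needB) };
  }
  return null; // already on target, or no surplus to spend
}

const consolidationPlan = planConsolidation({
  creditsA: 800, creditsB: 0, desiredA: 500, desiredB: 0.01, priceA: 0.004, priceB: 200,
});
console.log(consolidationPlan);
```

Capping at the surplus rather than at the deficit is what keeps it credit-bounded: in the example, covering B would need about 500 A, but only 300 A are spare, so the new band opens with a bit less B instead of dipping into other balances.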

Simplified monitor implementation

The monitor uses getLineageRollup as its single source of truth; the Telegram renderer shows the exact figures the test asserts.

```typescript
const ledger = await getLineageRollup({
  storage,
  userId: Number(p.user_id),
  poolAddress: p.pool_address,
  lineageId: p.lineage_id!,
  tokenX, tokenY, decX, decY,
  markAUsd: priceX,
  markBUsd: priceY,
});

// Rendered PnL in the Telegram message:
const includeUnclaimed = cfg.pnl.includeUnclaimedFees ?? true;
const inventoryUsd = snap.liq_usd;
const unclaimedUsd = snap.fee_usd;
const claimedUsd = ledger.realisedFeeUsd;
const totalPnlUsd = inventoryUsd + claimedUsd + (includeUnclaimed ? unclaimedUsd : 0) - ledger.costBasisUsd;
const totalPnlPct = ledger.costBasisUsd > 0 ? (totalPnlUsd / ledger.costBasisUsd) * 100 : 0;
```

We also moved the implementation into dedicated files, keeping the monitor's index.ts lean and light.
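The Telegram PnL line is a single expression, which makes it easy to pin down with a test. A sketch with the monitor's inputs pulled into a plain object; PnlInputs and totalPnl are illustrative names, and the mapping to snap.liq_usd, snap.fee_usd and the ledger fields follows the snippet above.

```typescript
type PnlInputs = {
  inventoryUsd: number; // snap.liq_usd
  unclaimedUsd: number; // snap.fee_usd
  claimedUsd: number; // ledger.realisedFeeUsd
  costBasisUsd: number; // ledger.costBasisUsd = deposits - withdrawals
  includeUnclaimed?: boolean; // cfg.pnl.includeUnclaimedFees, defaults to true
};

function totalPnl(i: PnlInputs) {
  const include = i.includeUnclaimed ?? true;
  const totalPnlUsd = i.inventoryUsd + i.claimedUsd + (include ? i.unclaimedUsd : 0) - i.costBasisUsd;
  // Same guard as the monitor: no percentage when the cost basis is not positive
  const totalPnlPct = i.costBasisUsd > 0 ? (totalPnlUsd / i.costBasisUsd) * 100 : 0;
  return { totalPnlUsd, totalPnlPct };
}

const pnlWithFees = totalPnl({ inventoryUsd: 95, unclaimedUsd: 1, claimedUsd: 2, costBasisUsd: 100 });
console.log(pnlWithFees);
```

Because costBasisUsd already nets out withdrawals, the total expands to inventory + withdrawals + claimed (+ unclaimed) - deposits: what is still in the pool plus what came out, against what went in. One consequence of the guard: once withdrawals exceed deposits, the cost basis is not positive and the percentage reads 0.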

Everything feels more manageable now, and we fixed many small issues that came up last night while testing several pools live at the same time.

Next wave of tests

We have now started running different positions at the same time. These runs also exercise the range caps (to avoid DLMM realloc errors), funding headroom, and the lineage rollup under both single-sided and symmetric regimes.

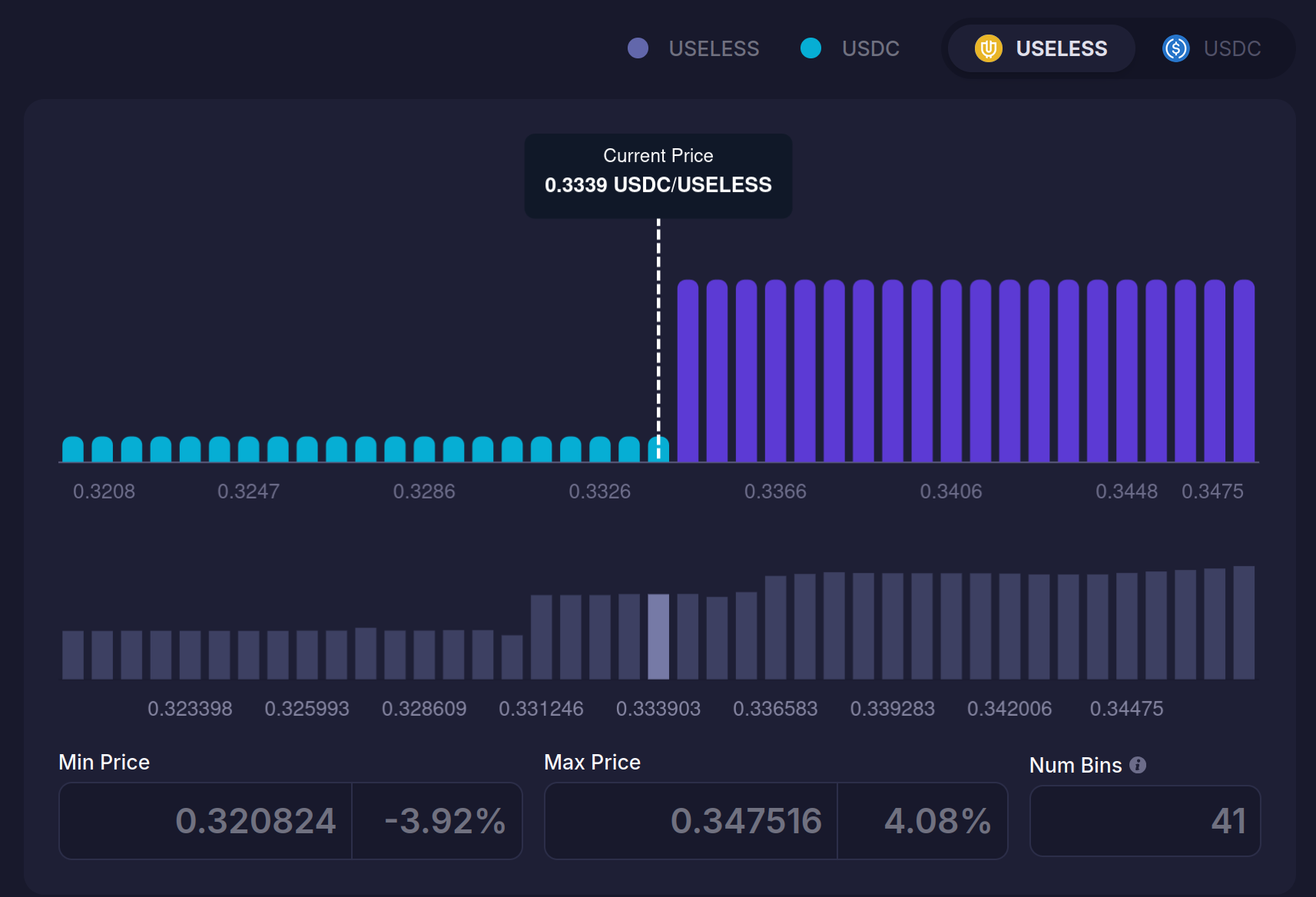

Tonight we left these running for a few hours:

--pool 9Ux4vtd8juEH4NMF4ae3tXYpKBdeCUUipX8z3EficKme --tokenA Dz9mQ9NzkBcCsuGPFJ3r1bS4wgqKMHBPiVuniW8Mbonk --tokenB EPjFWdd5AufqSSqeM2qN1xzybapC8G4wEGGkZwyTDt1v --amountA 100 --amountB 5 --side 0 --placement 1 --interval 40

--pool HbjYfcWZBjCBYTJpZkLGxqArVmZVu3mQcRudb6Wg1sVh --tokenA pumpCmXqMfrsAkQ5r49WcJnRayYRqmXz6ae8H7H9Dfn --tokenB So11111111111111111111111111111111111111112 --amountA 10000 --amountB 0.1 --side 0 --placement 3 --interval 40

--pool 8ztFxjFPfVUtEf4SLSapcFj8GW2dxyUA9no2bLPq7H7V --tokenA Dz9mQ9NzkBcCsuGPFJ3r1bS4wgqKMHBPiVuniW8Mbonk --tokenB So11111111111111111111111111111111111111112 --amountA 0 --amountB 0.25 --side 0 --placement 2 --interval 60

Let's keep building! In the next chapters, I'll finish polishing the Meteora implementation so it works with all sorts of strategies, and add pool creation to the automated flow.
